Python is useful for data scientists, especially with PySpark, but it’s a big problem for sysadmins: they have to install Python 2.7+, Spark, and numpy, scipy, sklearn, and pandas on every node, because Cloudera said so. Imagine this: you have a cluster with 1000+ or even 5000+ nodes. Even if you are good with DevOps tools such as Puppet or Fabric, this work still costs a lot of time. Continue reading Integrate pyspark and sklearn with distributed parallel running on YARN

Monthly Archives: July 2017

Spark read LZO file error in Zeppelin

Because our dear, stingy Party A said they will not add any nodes to the cluster, we must compress the data to reduce disk consumption. Actually I like LZ4: it’s natively supported by Hadoop, the compression/decompression speed is good enough, and the compression ratio is better than LZO’s. But in the end I had to choose LZO, no reason.

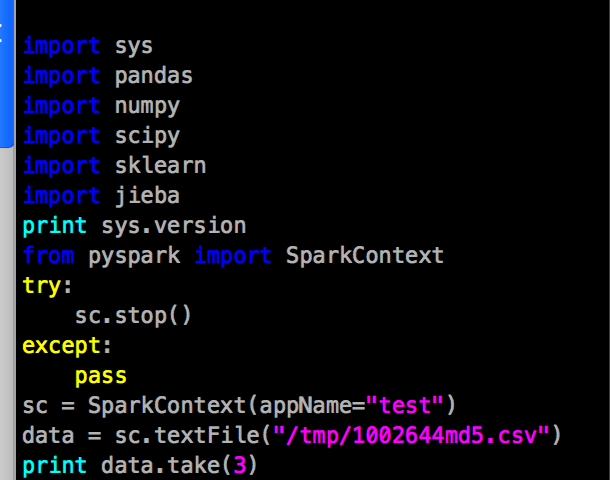

Since we use Cloudera Manager to install Hadoop and Spark, there is no error when reading an LZO file from the command line; simply read it as a text file, e.g.:

val data = sc.textFile("/user/dmp/miaozhen/ott/MZN_OTT_20170101131042_0000_ott.lzo")

data.take(3)

But in Zeppelin, it tells me: native-lzo library not available. WTF?

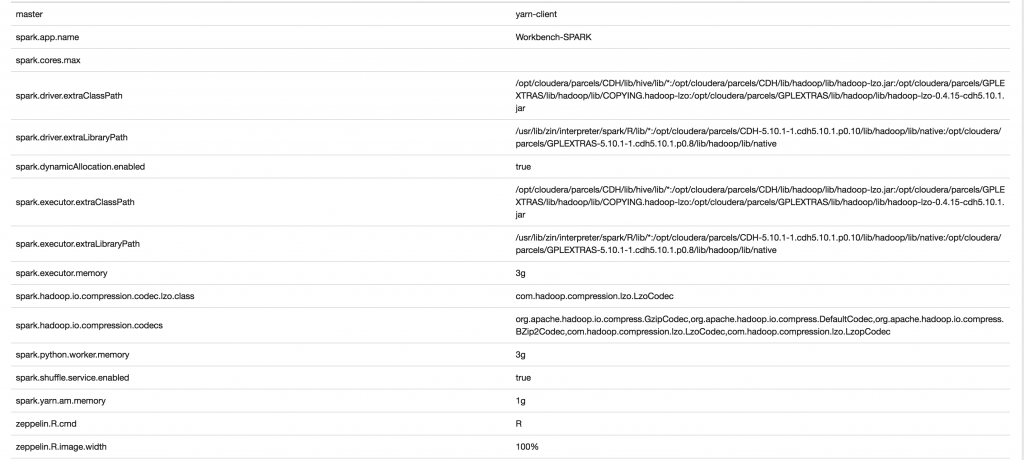

Well, Zeppelin is a self-contained environment: it reads only its own configuration and no other configs. For example, it will not try to read /etc/spark/conf/spark-defaults.conf. So I had to write out all the Spark settings in Zeppelin, just as you would write them in spark-defaults.conf.
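One rough way to see which settings differ is to print them in both environments and compare. This is only a sketch: it assumes `sc` is the SparkContext that spark-shell and the Zeppelin Spark interpreter provide, and the keys listed are standard Spark and Hadoop properties that I guess are relevant to native library loading, not a confirmed list of what this cluster needs.

```scala
// Run this in both spark-shell and a Zeppelin paragraph, then compare the output.
// Assumes `sc` is the SparkContext supplied by the environment.
val keys = Seq(
  "spark.driver.extraLibraryPath",
  "spark.executor.extraLibraryPath",
  "spark.driver.extraClassPath",
  "spark.executor.extraClassPath"
)

// Spark-level settings; "<not set>" marks a key missing from this environment
keys.foreach { k =>
  val value = sc.getConf.getOption(k).getOrElse("<not set>")
  println(s"$k = $value")
}

// Native library search path of the driver JVM
println("java.library.path = " + System.getProperty("java.library.path"))

// Hadoop codec list seen by this SparkContext (prints null if unset)
println("io.compression.codecs = " + sc.hadoopConfiguration.get("io.compression.codecs"))
```

Any key that has a value in spark-shell but shows `<not set>` in Zeppelin is a candidate to copy into the Zeppelin configuration.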

In our cluster, the Zeppelin conf looks like this: