I built a Kerberos-secured Hadoop cluster for P&G with Cloudera Manager, and this document records how to enable Kerberos on a cluster managed by Cloudera Manager. First, the cluster needs a Kerberos KDC and Kerberos clients.

- Install KDC server

Run this on one server only, because the KDC is installed on a single server:

```
sudo yum -y install krb5-server krb5-libs krb5-workstation krb5-auth-dialog openldap-clients
```

This installs the Kerberos server, plus client tools such as kinit, klist and kadmin from krb5-workstation.

- Modify /var/kerberos/krb5kdc/kdc.conf

```
[kdcdefaults]
 kdc_ports = 88
 kdc_tcp_ports = 88

[realms]
 PG.COM = {
  #master_key_type = aes256-cts
  acl_file = /var/kerberos/krb5kdc/kadm5.acl
  dict_file = /usr/share/dict/words
  admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab
  supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal
  max_renewable_life = 7d
 }
```

Replace the default realm EXAMPLE.COM with PG.COM, and add max_renewable_life = 7d or longer (for example, 4w means four weeks).

- Modify /etc/krb5.conf

```
[libdefaults]
 default_realm = PG.COM
 dns_lookup_kdc = false
 dns_lookup_realm = false
 ticket_lifetime = 259200
 renew_lifetime = 604800
 forwardable = true
 default_tgs_enctypes = rc4-hmac
 default_tkt_enctypes = rc4-hmac
 permitted_enctypes = rc4-hmac
 udp_preference_limit = 1
 kdc_timeout = 3000

[realms]
 PG.COM = {
  kdc = pg-dmp-master2.hadoop
  admin_server = pg-dmp-master2.hadoop
 }
```

Change EXAMPLE.COM to PG.COM here as well. In the [realms] section, kdc points to the node where the KDC is installed and admin_server points to the server running kadmin. In this cluster, both are pg-dmp-master2.hadoop.
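Clients need a krb5.conf that points at the same realm and KDC, so it can help to generate the file from one place. The following is a minimal sketch. render_krb5_conf is a hypothetical helper that prints the file above, with the realm and KDC host as parameters. Note that ticket_lifetime and renew_lifetime are given in seconds: 259200 s is 3 days and 604800 s is 7 days, which matches max_renewable_life = 7d in kdc.conf.

```bash
# render_krb5_conf REALM KDC_HOST [ADMIN_HOST]  (hypothetical helper)
# Prints the krb5.conf shown above; ADMIN_HOST defaults to KDC_HOST.
# ticket_lifetime 259200 s = 3 days, renew_lifetime 604800 s = 7 days.
render_krb5_conf() {
  local realm="$1" kdc_host="$2" admin_host="${3:-$2}"
  cat <<EOF
[libdefaults]
 default_realm = ${realm}
 dns_lookup_kdc = false
 dns_lookup_realm = false
 ticket_lifetime = 259200
 renew_lifetime = 604800
 forwardable = true
 default_tgs_enctypes = rc4-hmac
 default_tkt_enctypes = rc4-hmac
 permitted_enctypes = rc4-hmac
 udp_preference_limit = 1
 kdc_timeout = 3000

[realms]
 ${realm} = {
  kdc = ${kdc_host}
  admin_server = ${admin_host}
 }
EOF
}
```

For example, `render_krb5_conf PG.COM pg-dmp-master2.hadoop | sudo tee /etc/krb5.conf` writes the file on the current node.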

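- Create the realm on the KDC server

```
# kdb5_util create -s -r PG.COM
```

This creates the working realm PG.COM. You are prompted for the database master password. The -s option stores the master key in a stash file so that the KDC can start without asking for it.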
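To confirm that the realm was created, you can look for its krbtgt principal. Every realm database contains krbtgt/REALM@REALM, whose key encrypts the TGTs. The following is a sketch, assuming it runs as root on the KDC server. has_krbtgt and realm_initialized are hypothetical helper names.

```bash
# has_krbtgt REALM: succeed if the principal list on stdin contains krbtgt/REALM@REALM
has_krbtgt() {
  grep -qxF "krbtgt/$1@$1"
}

# realm_initialized REALM: list the database principals with kadmin.local and check them
realm_initialized() {
  kadmin.local -q "listprincs" | has_krbtgt "$1"
}
```

Usage on the KDC server: `realm_initialized PG.COM && echo "realm PG.COM is ready"`.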

- Create the admin user principal in PG.COM on the KDC server

```
# kadmin.local -q "addprinc root/admin"
```

I use root/admin as the admin principal, and you are prompted for its password. Note that root/admin is not the same as root. Both can exist in the Kerberos database, and they are two different principals.

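- Edit /var/kerberos/krb5kdc/kadm5.acl

```
*/admin@PG.COM    *
```

This defines the administrators of the PG.COM realm: any principal of the form name/admin@PG.COM is a Kerberos admin. The file path must match acl_file in kdc.conf, and the trailing * grants all kadmin privileges.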
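To make the ACL rule concrete, here is a rough approximation in shell of which principals the entry */admin@PG.COM selects. Under this reading of the pattern, a match needs exactly two name components, with the second one being admin, in realm PG.COM. This is only an illustration: kadmind does its own matching, and is_admin_principal is a hypothetical function, not part of any Kerberos tool.

```bash
# is_admin_principal PRINCIPAL REALM: approximates the kadm5.acl entry */admin@REALM
is_admin_principal() {
  local principal="$1" realm="$2"
  case "$principal" in
    *@*) ;;              # must carry an explicit realm
    *) return 1 ;;
  esac
  local name="${principal%@*}" princ_realm="${principal##*@}"
  [ "$princ_realm" = "$realm" ] || return 1
  case "$name" in
    */*/*) return 1 ;;   # three or more components: no match
    ?*/admin) return 0 ;; # "<something>/admin": treated as a kadmin admin
    *) return 1 ;;
  esac
}
```

With this rule, root/admin@PG.COM is an admin, but root@PG.COM and hdfs/node1.hadoop@PG.COM are not.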

- Check all the Kerberos configuration files and make sure they contain no errors:

/etc/krb5.conf

/var/kerberos/krb5kdc/kdc.conf

/var/kerberos/krb5kdc/kadm5.acl

- Now start the KDC and kadmin services on the KDC server

```
# service krb5kdc start
# service kadmin start
```

- Log in to every other node of the cluster and run

```
# yum install krb5-workstation krb5-libs krb5-auth-dialog openldap-clients cyrus-sasl-plain cyrus-sasl-gssapi
```

This installs the Kerberos clients on all nodes.
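Logging in to each node by hand gets tedious on a larger cluster. The following minimal sketch loops over SSH instead. It assumes passwordless SSH and passwordless sudo on every node. The function name and any host names you pass are hypothetical, and -y is added so that yum runs unattended.

```bash
# Kerberos client packages from the step above
KRB5_CLIENT_PKGS="krb5-workstation krb5-libs krb5-auth-dialog openldap-clients cyrus-sasl-plain cyrus-sasl-gssapi"

# install_krb5_clients HOST...  (hypothetical helper)
install_krb5_clients() {
  local host
  for host in "$@"; do
    echo "== ${host}"
    # one remote yum call per node; report a failure but keep going
    ssh "$host" "sudo yum -y install ${KRB5_CLIENT_PKGS}" || echo "install failed on ${host}" >&2
  done
}
```

For example: `install_krb5_clients pg-dmp-master1.hadoop worker1.hadoop worker2.hadoop`. The worker host names are placeholders.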

Then go back to Cloudera Manager, click Cluster -> Operation, and choose Enable Kerberos from the drop-down menu. Tick every item in the checklist, then click Next until the wizard finishes.

When the whole procedure is done, the Kerberos-secured Hadoop cluster is ready to use.

A few more questions came up after I installed the Kerberos-secured cluster.

How do I add a new user principal?

```
# kadmin.local -q "addprinc username@PG.COM"
```

Run this command on the kadmin (KDC) server whenever you add an ordinary user to the cluster. username@PG.COM is a normal Kerberos user. To add an admin user, use username/admin@PG.COM instead, where /admin is the pattern you defined in kadm5.acl.
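The naming rule above (plain user@PG.COM versus user/admin@PG.COM) can be captured in a small helper, so that a script cannot accidentally create an admin. The following is a sketch. principal_for and add_principal are hypothetical helper names, and add_principal must run as root on the KDC server.

```bash
# principal_for USER REALM [admin]: ordinary principal, or USER/admin for admins
principal_for() {
  if [ "${3:-}" = admin ]; then
    printf '%s/admin@%s\n' "$1" "$2"
  else
    printf '%s@%s\n' "$1" "$2"
  fi
}

# add_principal USER REALM [admin]: kadmin.local prompts for the new password
add_principal() {
  kadmin.local -q "addprinc $(principal_for "$@")"
}
```

For example, `add_principal dmp PG.COM` creates dmp@PG.COM, while `add_principal dmp PG.COM admin` creates dmp/admin@PG.COM.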

How do I administer HDFS or YARN? In other words, how do I act as the hdfs or yarn user?

Cloudera Manager automatically creates several service users in the cluster, such as hdfs, yarn and hbase. These accounts are defined as nologin with no password. Although they are Hadoop superusers, they have no plain principals in the Kerberos database, so you cannot request a ticket for them like this:

```
# kinit hdfs
kinit: Client not found in Kerberos database while getting initial credentials
# kinit hdfs@PG.COM
kinit: Client not found in Kerberos database while getting initial credentials
```

Instead, request a ticket with the keytab of the service user, like this:

```
kinit -kt hdfs.keytab hdfs/current_server@PG.COM
```

Here current_server is the hostname of the server you are logged in to and want to access HDFS or YARN from, such as a Hadoop client. Each node in the cluster has its own hdfs.keytab, so the principal must name the current host, as in the command above.
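Typing the host name by hand is error-prone because each node's keytab holds a different principal. The following sketch builds hdfs/FQDN@PG.COM from `hostname -f`. It assumes that the keytab principals use fully qualified host names and that hdfs.keytab is in the current directory. It uses `klist -kt` first to confirm that the keytab really contains that principal. The function names are hypothetical.

```bash
# hdfs_principal [REALM] [HOST]: hdfs service principal (defaults: PG.COM, this host)
hdfs_principal() {
  local realm="${1:-PG.COM}" host="${2:-$(hostname -f)}"
  printf 'hdfs/%s@%s\n' "$host" "$realm"
}

# kinit_as_hdfs [KEYTAB]: get a ticket as this host's hdfs principal
kinit_as_hdfs() {
  local keytab="${1:-hdfs.keytab}" principal
  principal="$(hdfs_principal)"
  # klist -kt lists the principals stored in a keytab; stop if ours is missing
  if ! klist -kt "$keytab" | grep -qF "$principal"; then
    echo "${principal} not found in ${keytab}" >&2
    return 1
  fi
  kinit -kt "$keytab" "$principal"
}
```

After `kinit_as_hdfs` succeeds, `hadoop fs` commands run with the hdfs principal's credentials.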

When I ran a smoke test such as the Pi example, it failed, even though I had already added a new user principal to the KDC database:

```
17/04/24 11:18:35 INFO mapreduce.Job: Job job_1493003216756_0004 running in uber mode : false
17/04/24 11:18:35 INFO mapreduce.Job: map 0% reduce 0%
17/04/24 11:18:35 INFO mapreduce.Job: Job job_1493003216756_0004 failed with state FAILED due to: Application application_1493003216756_0004 failed 2 times due to AM Container for appattempt_1493003216756_0004_000002 exited with exitCode: -1000
For more detailed output, check application tracking page:http://pg-dmp-master1.hadoop:8088/proxy/application_1493003216756_0004/Then, click on links to logs of each attempt.
Diagnostics: Application application_1493003216756_0004 initialization failed (exitCode=255) with output: main : command provided 0
main : run as user is dmp
main : requested yarn user is dmp
User dmp not found
Failing this attempt. Failing the application.
17/04/24 11:18:35 INFO mapreduce.Job: Counters: 0
Job Finished in 1.094 seconds
java.io.FileNotFoundException: File does not exist: hdfs://PG-dmp-HA/user/dmp/QuasiMonteCarlo_1493003913376_864529226/out/reduce-out
	at org.apache.hadoop.hdfs.DistributedFileSystem$20.doCall(DistributedFileSystem.java:1257)
	at org.apache.hadoop.hdfs.DistributedFileSystem$20.doCall(DistributedFileSystem.java:1249)
	at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
	at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1249)
	at org.apache.hadoop.io.SequenceFile$Reader.<init>(SequenceFile.java:1817)
	at org.apache.hadoop.io.SequenceFile$Reader.<init>(SequenceFile.java:1841)
	at org.apache.hadoop.examples.QuasiMonteCarlo.estimatePi(QuasiMonteCarlo.java:314)
	at org.apache.hadoop.examples.QuasiMonteCarlo.run(QuasiMonteCarlo.java:354)
	at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
	at org.apache.hadoop.examples.QuasiMonteCarlo.main(QuasiMonteCarlo.java:363)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:497)
	at org.apache.hadoop.util.ProgramDriver$ProgramDescription.invoke(ProgramDriver.java:71)
	at org.apache.hadoop.util.ProgramDriver.run(ProgramDriver.java:144)
	at org.apache.hadoop.examples.ExampleDriver.main(ExampleDriver.java:74)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:497)
	at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
	at org.apache.hadoop.util.RunJar.main(RunJar.java:136)
```

How can I create a user keytab just for submitting jobs, instead of using the hdfs/yarn/mapred users?

```
# kadmin
kadmin: xst -k dmp.keytab dmp@PG.COM
# ktutil
ktutil: rkt dmp.keytab
ktutil: wkt dmp-2.keytab
ktutil: clear
# kinit -k -t dmp-2.keytab dmp@PG.COM
# hadoop fs -ls /
...
```

You can also use this keytab file in a JAAS configuration for MapReduce job submission. Could the ktutil step be omitted? I'll try it later.
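xst (an alias of ktadd) writes the principal's keys directly into dmp.keytab. The ktutil rkt/wkt round trip above therefore appears to do nothing more than copy the same keys into dmp-2.keytab. There is one caveat: xst generates new random keys for the principal, so the password set with addprinc no longer works afterwards. kadmin.local accepts -norandkey to keep the existing keys. The following sketch skips ktutil entirely. The helper names and keytab paths are hypothetical, and make_user_keytab is assumed to run as root on the KDC server.

```bash
# xst_query PRINCIPAL KEYTAB: kadmin query that writes the principal's keys to KEYTAB
xst_query() {
  printf 'xst -k %s %s\n' "$2" "$1"
}

# make_user_keytab PRINCIPAL KEYTAB [OWNER]: export the keytab and lock it down
make_user_keytab() {
  local principal="$1" keytab="$2" owner="${3:-}"
  kadmin.local -q "$(xst_query "$principal" "$keytab")" || return 1
  # a keytab works like a stored password: keep it readable only by its owner
  if [ -n "$owner" ]; then
    chown "$owner" "$keytab"
  fi
  chmod 600 "$keytab"
}

# login_with_keytab PRINCIPAL KEYTAB: get a ticket from the keytab and show it
login_with_keytab() {
  kinit -kt "$2" "$1" && klist
}
```

For example, run `make_user_keytab dmp@PG.COM /home/dmp/dmp.keytab dmp` on the KDC server. Then, as dmp on the client, run `login_with_keytab dmp@PG.COM /home/dmp/dmp.keytab && hadoop fs -ls /`. This assumes the keytab has been copied to the client.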

Back to the Pi error above. In an unsecured cluster, Hadoop starts all containers as an existing service user such as yarn. In a secured cluster, a job's containers run as the user who submitted the job. The error occurred because I had added only the Kerberos principal. No local user named dmp existed on the nodes ("User dmp not found"), so the container executor could not start the ApplicationMaster, mappers or reducers. The fix is to add this user on each node with a plain Linux command:

```
# useradd dmp
```

Run it on every node in the cluster and on the client. Instead of adding users manually on each node, you can also manage the accounts centrally with OpenLDAP.
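Because the account must exist on every node and on the client, an idempotent loop saves you from repeating useradd by hand. The following is a sketch, assuming passwordless SSH and sudo. The function name and host names are hypothetical.

```bash
# ensure_user_on_nodes USER HOST...: create USER on each host where it is missing
ensure_user_on_nodes() {
  local user="$1" host
  shift
  for host in "$@"; do
    # id -u fails when the account does not exist; only then run useradd
    ssh "$host" "id -u ${user} >/dev/null 2>&1 || sudo useradd ${user}"
  done
}
```

For example: `ensure_user_on_nodes dmp pg-dmp-master1.hadoop pg-dmp-master2.hadoop worker1.hadoop`. Here worker1.hadoop is a placeholder. Running the function twice is harmless, because existing accounts are left alone.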

Finally, some basic Kerberos theory.

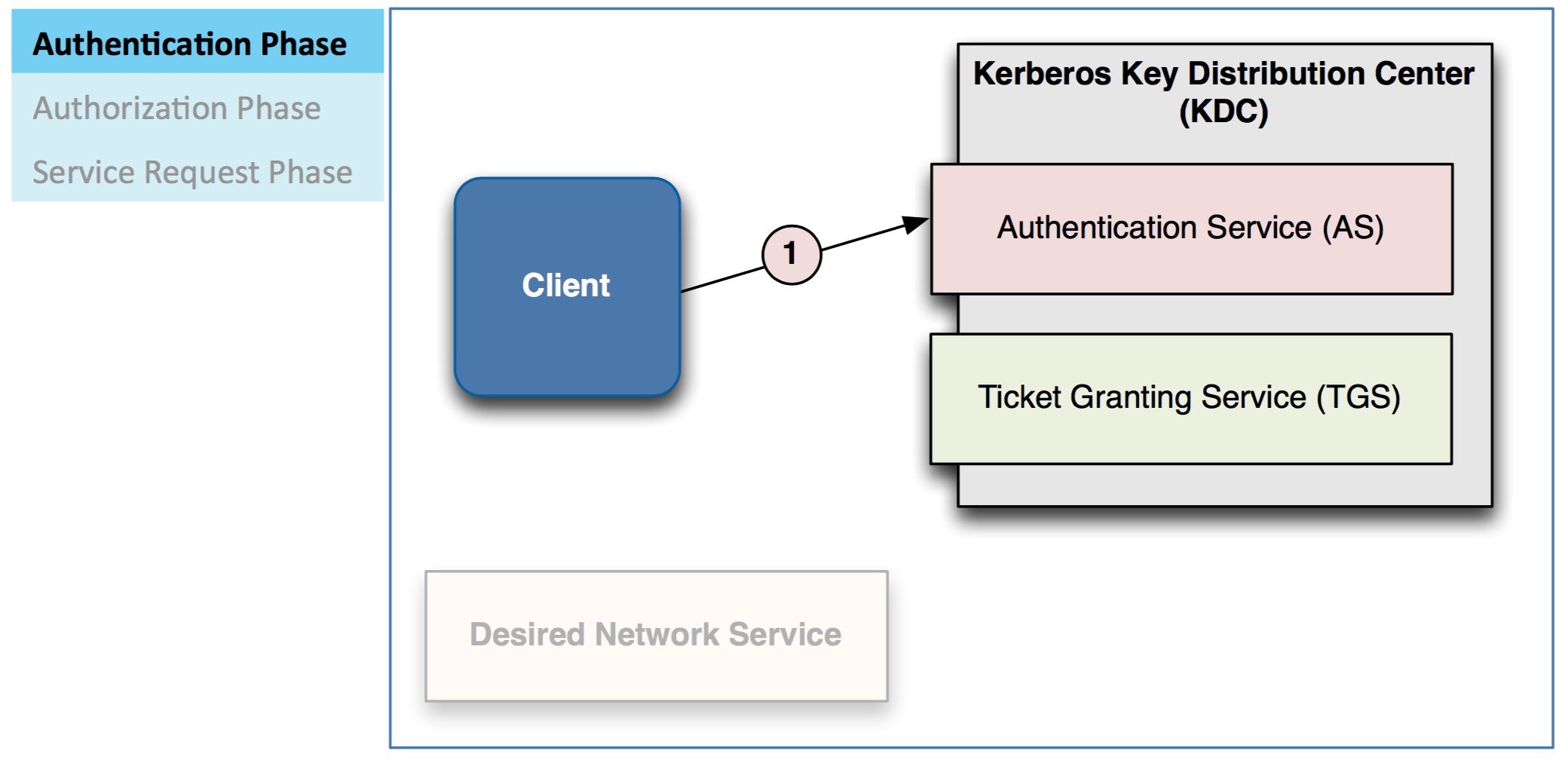

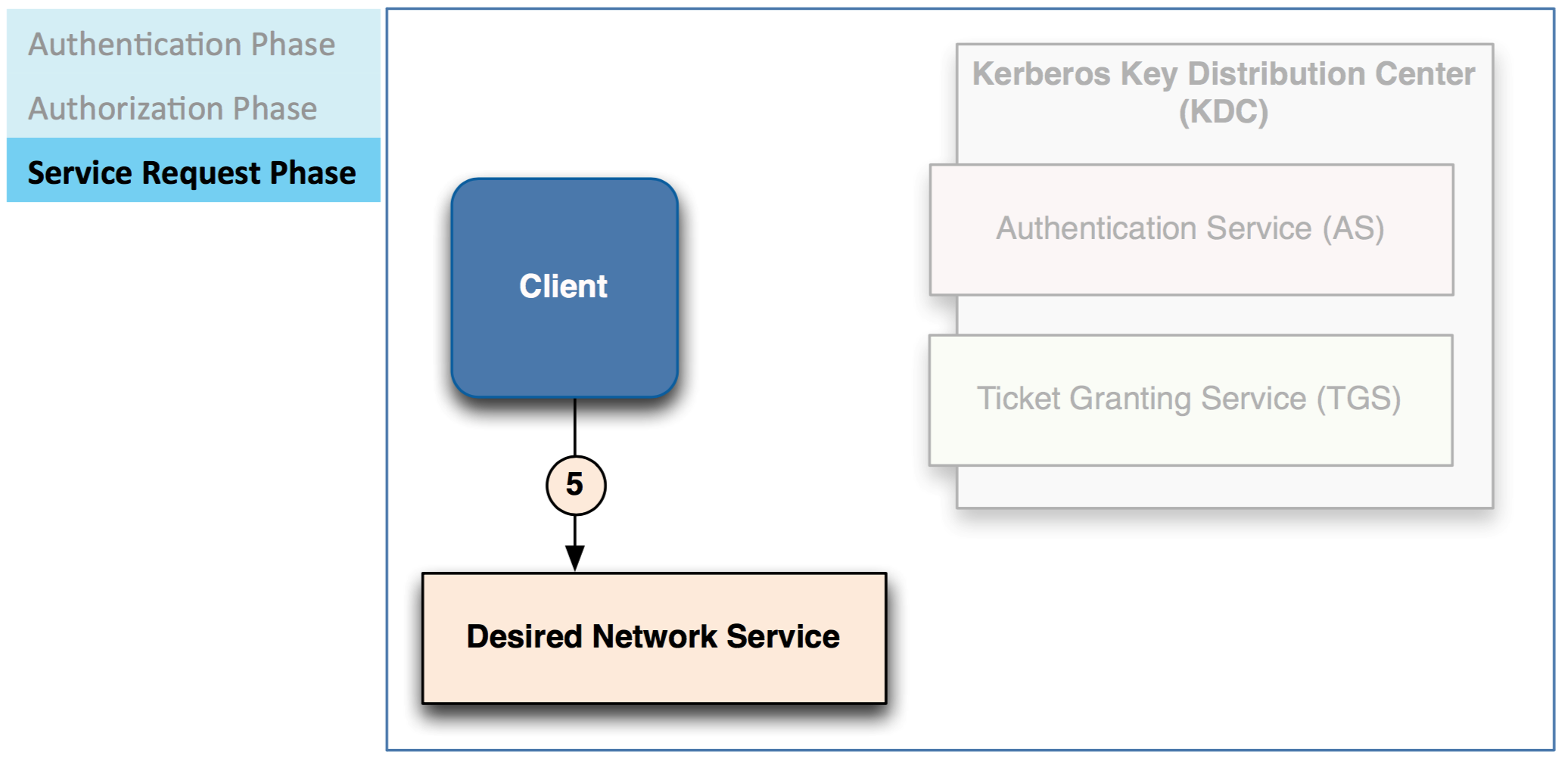

There are three phases required for a client to access a service

– Authentication

– Authorization

– Service request

Client sends a Ticket-Granting Ticket (TGT) request to AS

AS checks database to authen2cate client

– Authentication typically done by checking LDAP/Active Directory

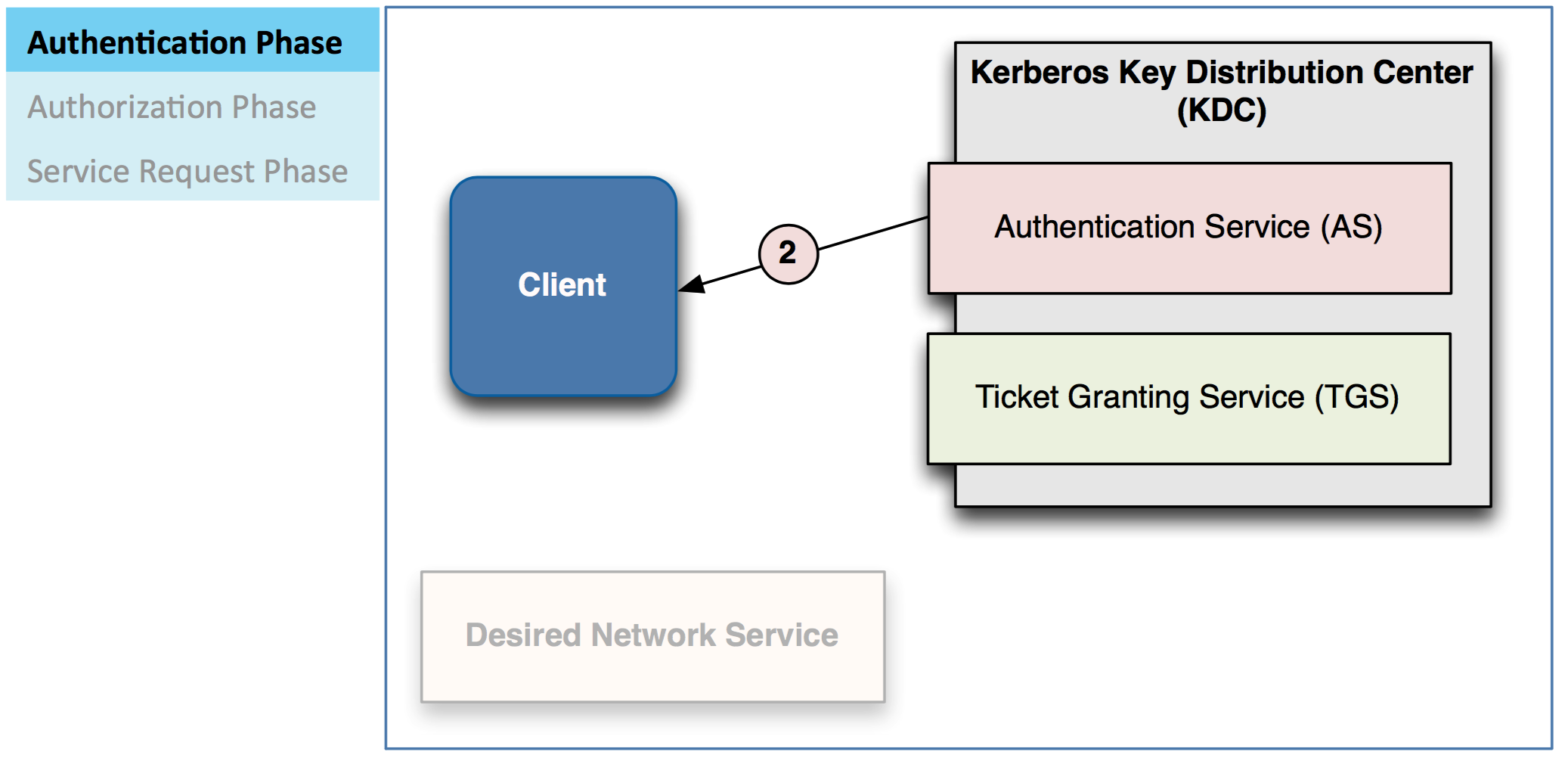

– If valid, AS sends Ticket/Granting Ticket (TGT) to client

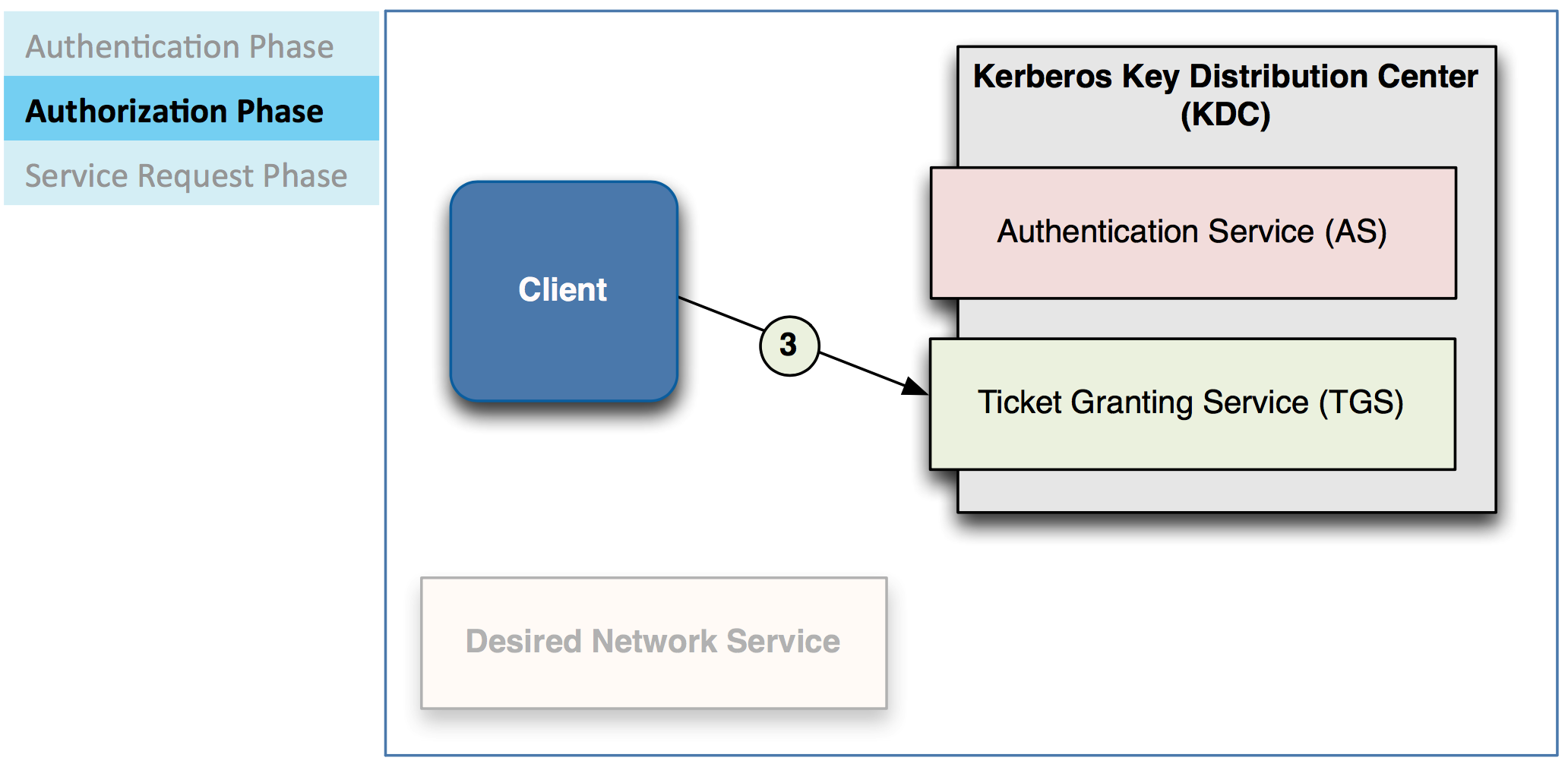

Client uses this TGT to request a service ticket from TGS

– A service Bcket is validation that a client can access a service

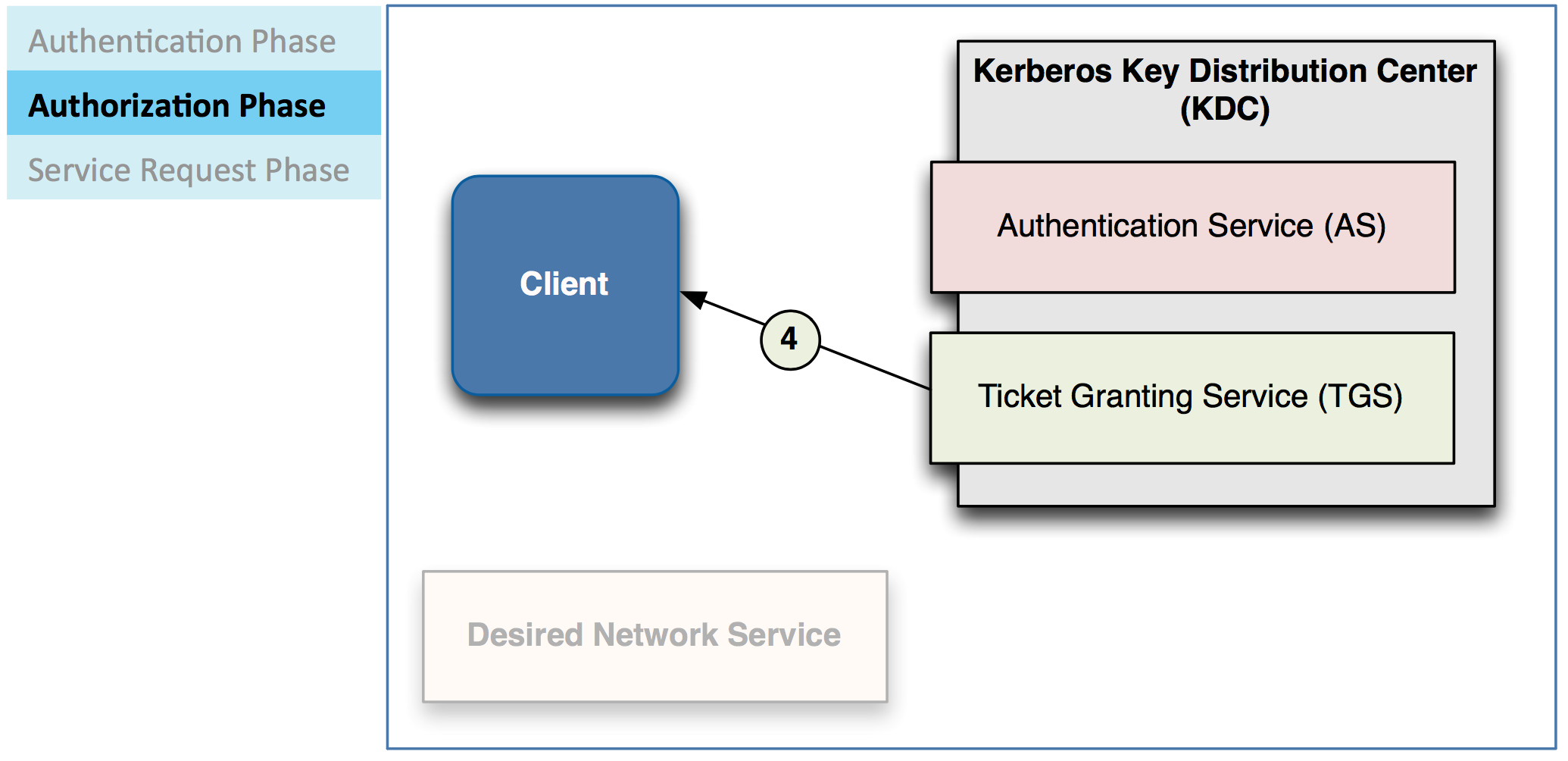

TGS verifies whether client is permitted to use requested service

– If access granted, TGS sends service Bcket to client

Client can use then use the service

– Service can validate client with info from the service ticket
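You can observe the AS and TGS exchanges from any client node with the standard MIT tools. kinit performs the AS exchange and stores a TGT for krbtgt/PG.COM@PG.COM. kvno asks the TGS for a service ticket and prints its key version number. The following is a sketch, assuming that the user principal exists. The function name is hypothetical, and the service principal in the usage line is only an example.

```bash
# show_kerberos_phases USER_PRINCIPAL SERVICE_PRINCIPAL  (hypothetical helper)
show_kerberos_phases() {
  local user="$1" service="$2"
  kdestroy 2>/dev/null     # start from an empty credential cache
  kinit "$user" || return 1  # AS exchange: obtain a TGT (krbtgt/REALM@REALM)
  klist                    # the cache now holds the TGT
  kvno "$service"          # TGS exchange: use the TGT to get a service ticket
  klist                    # the cache now also holds a ticket for the service
}
```

For example: `show_kerberos_phases dmp@PG.COM hdfs/pg-dmp-master1.hadoop@PG.COM`.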

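- kinit obtains a ticket from Kerberos.
- klist lists the tickets in your credential cache, and klist -kt hdfs.keytab lists the principals stored in a keytab. To list the principals in the Kerberos database, run kadmin.local -q "listprincs" on the KDC server.
- kdestroy explicitly deletes your tickets.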
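For scripted jobs, such as hadoop fs commands run from cron, a small wrapper can reuse a valid ticket and fall back to a keytab otherwise. `klist -s` prints nothing and exits with status 0 only when the credential cache holds a valid ticket. The following is a sketch. The function name, principal and keytab path are examples.

```bash
# ensure_ticket PRINCIPAL KEYTAB: keep a valid ticket, or get a new one from the keytab
ensure_ticket() {
  local principal="$1" keytab="$2"
  if klist -s; then
    return 0   # the current ticket is still valid
  fi
  kinit -kt "$keytab" "$principal"
}
```

For example: `ensure_ticket dmp@PG.COM /home/dmp/dmp.keytab && hadoop fs -ls /`.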

(The pictures and their captions are taken from the Cloudera Administrator Training course, copyright cloudera.com.)