Since I didn’t spend much time on Hadoop and Spark maintenance, our first party would like to use JupyterHub/Lab on Hadoop, and their cluster has been hardened with Kerberos and HDFS encrypti[...]

DS means Data Scientist? NO!

These “Data Scientists” at our dear first party could supply all my jokes for this year. (Updated at any time.) DS: Why does my Spark job take so much time? Me: ? DS: Me: No, it’s a resident [...]

jupyterlab and pyspark2 integration in 1 minute

As we use CDH 5.14.0 on our Hadoop cluster, the highest supported Spark version is 2.1.3, so this post records how I installed pyspark-2.1.3 and integrated it with jupyter-lab. [...]

Build your own IPv6 proxy (“ladder”)

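The following is aimed at readers who already have some experience with networking and with setting up proxies. Preparation: one Vultr US host, referred to below as HAA. When creating it, choose an instance that supports both IPv6 and IPv4; as of 2018-12-12 the lowest price is $3.50. Pick a location near the West Coast: Seattle or LA both work and tend to be faster, while in tests from my own network Silicon Valley was only average. For Vultr European hosts, Paris, Amsterdam, or Frankfurt all work, and the number of hosts is up to you. Choose IPv6 only, priced at $2.50[...]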

Using py-SparkSQL2 in Zeppelin to query encrypted HDFS data

%spark2_1.pyspark from pyspark.sql import SQLContext from pyspark.sql import HiveContext, Row from pyspark.sql.types import * import pandas as pd import pyspark.sql.functions as F trial_pps_or[...]

Kerberos Master/Slave HA configuration

Since we have only one KDC on our cluster, it is a SPOF (Single Point of Failure), so I have to create a Master/Slave KDC pair to avoid this problem. There are several steps to convert the single-point setup to HA. De[...]

Use encrypted password in zeppelin and some other security shit

For security reasons, we cannot expose user passwords in Zeppelin, so we must write encrypted passwords into shiro.ini. So how do we enable encrypted passwords in Zeppelin?

Enable HTTPS access in Zeppelin

I used a certified key file to enable HTTPS; if you use a self-signed key, see the second part. First part: I had two files, one of which is the private key named server.key and the other is certif[...]

How to use cloudera parcels manually

A Cloudera parcel is actually a compressed file format: it is just a tgz file with some meta info, so we can simply untar it with the command tar zxf xxx.parcel. So we have the capability to extract multi ver[...]
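Because a parcel is just a gzip-compressed tar archive, the same unpacking can be done from Python with the standard library. The snippet below is only a minimal sketch of that idea: the function name `extract_parcel` and the paths in the usage note are hypothetical, not taken from the original post.

```python
import tarfile
from pathlib import Path


def extract_parcel(parcel_path, dest_dir):
    """Unpack a .parcel file (a gzip-compressed tar) into dest_dir.

    Hypothetical helper for illustration; it is the Python equivalent of
    running `tar zxf xxx.parcel` inside dest_dir.
    Returns the sorted names of the top-level entries in the archive.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    # "r:gz" forces gzip decoding, since a parcel is a tgz under another name.
    with tarfile.open(parcel_path, mode="r:gz") as archive:
        names = archive.getnames()
        # Only unpack parcels from a source you trust: extractall writes
        # whatever member paths the archive contains.
        archive.extractall(dest)
    return sorted({name.split("/")[0] for name in names})
```

Calling `extract_parcel("xxx.parcel", "/some/target/dir")` would unpack the archive and return its top-level directory names, which is a quick way to see which version a parcel carries.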

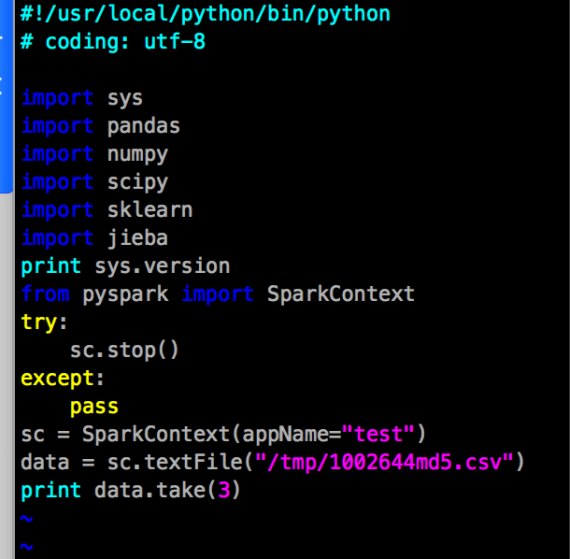

Integrate pyspark and sklearn with distributed parallel running on YARN

Python is useful for data scientists, especially with pyspark, but it’s a big problem for sysadmins: they have to install Python 2.7+, Spark, and numpy, scipy, sklearn, and pandas on each node, well, bec[...]